Recent Ethical Concerns Surrounding AI in Radiology

Includes an interview with the director of breast imaging at Valley Hospital in Paramus, NJ

AI IN HEALTHCARE

11/12/2025 | 4 min read

While the concept of artificial intelligence (AI) in healthcare is relatively old, dating back to the 1960s, its development has accelerated as society adopts a more positive outlook toward the technology. AI has been transforming drug development, for example, by helping researchers discover lead compounds, new biomarkers, and potential anomalies.

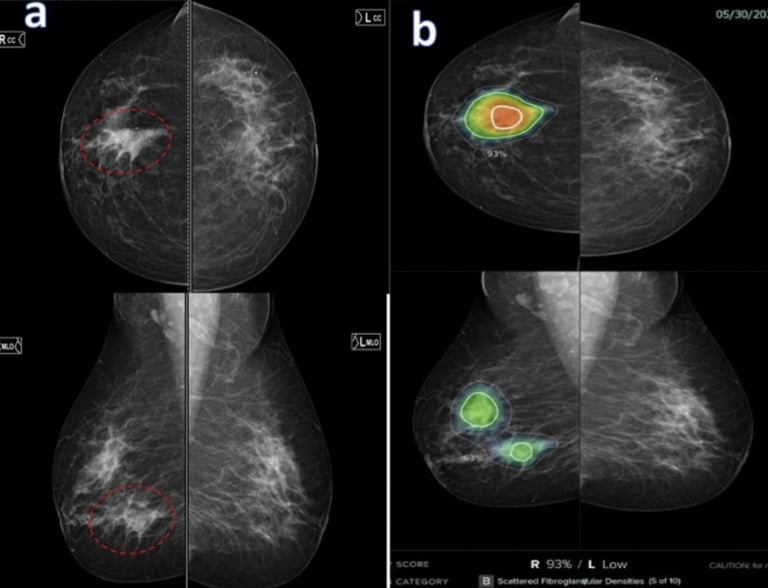

The growing popularity of AI in medicine has also raised many concerns within the medical industry, particularly in medical screening. Because AI is efficient and has the potential to advance image acquisition, medical imaging is a field that has used it extensively. According to an article titled Redefining Radiology: A Review of Artificial Intelligence Integration in Medical Imaging, published by the National Institutes of Health (NIH), AI has been used in medical screening since relatively early on, through computer-aided detection (CAD), which the FDA approved in 1998. CAD is software that helps doctors identify potential abnormalities in screening mammograms. By improving disease detection and image segmentation and by streamlining workflows, AI integration in CAD makes cancer and disease detection more efficient.

There has been a surge of debate over the ethical issues that arise when clinical work shifts to an AI-led approach. For example, according to an NIH article titled Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility?, one of the biggest concerns is algorithmic bias that perpetuates existing disparities. The data used to train AI algorithms tend to overrepresent certain patient demographics, whether based on race, gender, or other patient characteristics. As a result, AI can be considerably less accurate when assessing conditions in patients from minority groups that are underrepresented in the training data.

This concern is compounded by another prominent flaw in AI: the black box problem. Although an AI system receives inputs and produces outputs, how it arrives at those outputs is often difficult to determine. This means that even the developers of AI software, such as CAD, may not be able to sufficiently explain how it reached a particular conclusion about a patient's diagnosis.

To compare medical imaging under full AI adoption with how the field previously functioned (or could function) without AI assistance, a guided interview was conducted with the head of breast imaging at Valley Hospital. Dr. Elina Zaretsky, M.D., is a breast imaging radiologist who evaluates current research on AI's integration into breast imaging. She recently gave a presentation on this subject to healthcare professionals at a multidisciplinary conference.

Interview with Dr. Elina Zaretsky, M.D.

How do you make sure AI tools used in breast imaging work equally well for all patients, including those in underrepresented demographics?

“It is very hard because the AI model is trained on a population. You train an AI model by taking a bunch of patient mammograms and giving them to an AI system. The AI system then does some deep learning to come up with patterns that will say that this particular mass is a cancer and this particular mass is not. However, the AI system is trained on live mammograms from live patients. Now, if you use a thousand patients from one state, they may not be representative of a thousand patients from a different state. They may be representative of a lower or higher socioeconomic status. It could also be according to other demographics, such as race and religion. It is a challenge for AI systems to be trained on representative patient populations and not just a subset of a very wide population. As we know, breast cancer is more prevalent in certain groups than in others. African Americans tend to have worse prognoses, and they're also more likely to have aggressive cancers than other people. Ashkenazi Jewish people also have a greater predisposition to breast cancer than other populations. In my talk, I discussed how we cannot generalize AI interpretation unless we know that the system is trained on a representative cohort of patients.”

How do you handle the lack of transparency in AI systems when making clinical decisions?

“There are different ways that we try to address this, and one of them is integrating something called ‘explainable AI.’ This tells us how the AI system came up with the interpretation that it did. But you're right, it is very hard for a breast imager to believe AI when they don't know how it came up with that interpretation. If there is a density on the mammogram and the AI flags this density as suspicious, a radiologist may say it does not look suspicious and dismiss the finding. However, if the radiologist knew that the AI flagged this density as suspicious because there is an irregular margin, and it points to where that margin is, the radiologist is more likely to believe and accept this interpretation.”

If an AI makes an error in diagnosis, who should be responsible?

“As of right now, if there is a clinical error made by a physician that potentially results in patient harm, that physician can be sued for malpractice. However, in the new landscape of AI being able to read radiology images with no oversight by the clinician, liability becomes a challenge. Would the doctor assume liability, or would the AI company assume liability? Therefore, for the patient's safety, it is probably best that the radiologist still has at least some type of oversight over the AI systems.”

Do AI’s benefits in breast imaging outweigh its risks, or is human judgment still better?

“I think AI can be used as a very important aid to breast radiologists, given that there is a national shortage of radiologists, specifically breast radiologists. The number of studies being done is very high, so it is imperative that radiologists get assistance in interpretation. However, you still need clinical judgment, because the doctor is the one who looks at not just an image but combines the clinical information with the imaging to make a final interpretation. The AI system may not take the patient's clinical information into consideration. For instance, if a patient comes in with a lump and there's something on the skin that looks like an infection, the AI system may say that it's a cancer because the lump has very irregular margins on the imaging. However, the radiologist's clinical judgment will say that even though it looks irregular on the ultrasound, the patient looks like they have more of an infection.”